Thank you for the 500+ responses!

I want to start off by thanking the survey respondents who took the time to make their way through the images and provided their selections. The response was overwhelming and far greater than I had expected. I was expecting ~100 responses, which would have provided a rather wide margin of error, but the survey blew through that in less than 12 hours. Within a span of a few days, the survey concluded with 505 responses, which provides for a nice and narrow margin of error.

I’d also like to thank the people who commented on the posts on DPReview and in the Facebook groups. I had consulted a few experts before launching the survey and was warned several times that I should expect to be crucified for attempting this. The actual experience did include some of that, but most people were curious, insightful, and supportive.

For background and context regarding the survey, please refer to the original post at https://fcracer.com/does-camera-sensor-size-matter/.

My personal bias going into the survey

From the start, my goal was to be transparent about how the images were taken, how they were processed, and how they were used in the survey. I also want to be transparent about any personal bias I may have had when building the survey. I own APS-C, Full Frame, and Medium Format sensor cameras and love them all. The biggest step change I experienced in image quality came from the Medium Format GFX, likely due to the extra resolution that 50MP provides versus 24MP in my other format cameras.

Before designing the survey, I conducted extensive research online to see what others had done before in evaluating sensor size and its impact on image quality. There is no doubt that a larger sensor, all else held equal, provides better image quality, and that was not what I wanted to test with this survey. Where debate continues is whether sensor size can be seen in images that are viewed on commonly used desktop screens, tablets, and mobile phones, and by extension, in smaller-format printed media.

When doing the research, I found that the discussion tended to split into two camps, with strong views on both sides:

- The first group believes strongly that sensor size is not visible in images on anything smaller than a high resolution 4-5k screen or A3+ print size. This is perhaps the more commonly held position, at least based on the number of posts from this perspective.

- The second group believes equally strongly that you can see an impact from sensor size on smaller images, with some able to see it down to Instagram size. There is a common phrase that people use, citing the “Medium Format Look”.

Going into the survey, I was firmly in the first group; I’ve compared A3+ sized printed output on a Canon Pro-100 printer between the APS-C X-Pro2 and the Medium Format GFX and didn’t see much of a difference. On a 2017 5k iMac screen, the GFX images seem to look better, but I felt that was more down to RAG Syndrome (Recently Acquired Gear Syndrome), where my latest acquisition always seems to look the best and my previous gear seems to drop down a rank.

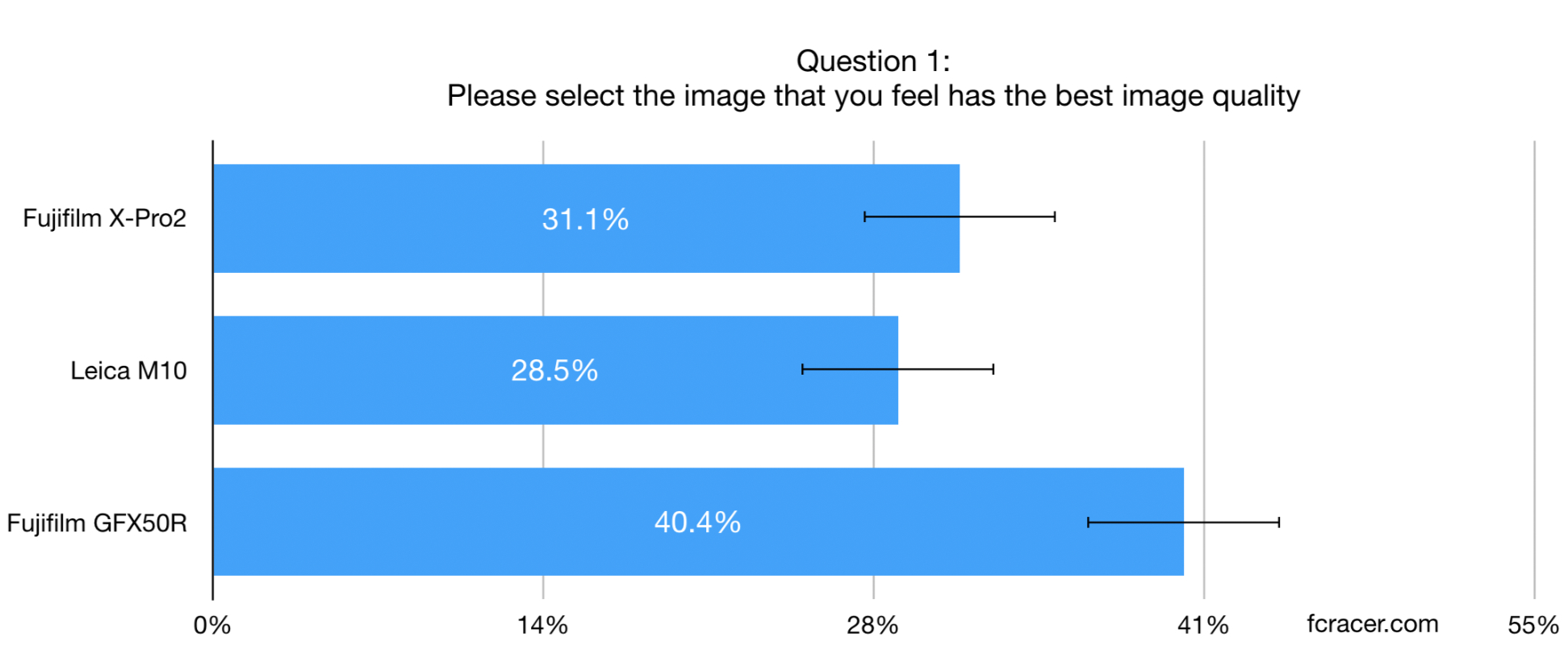

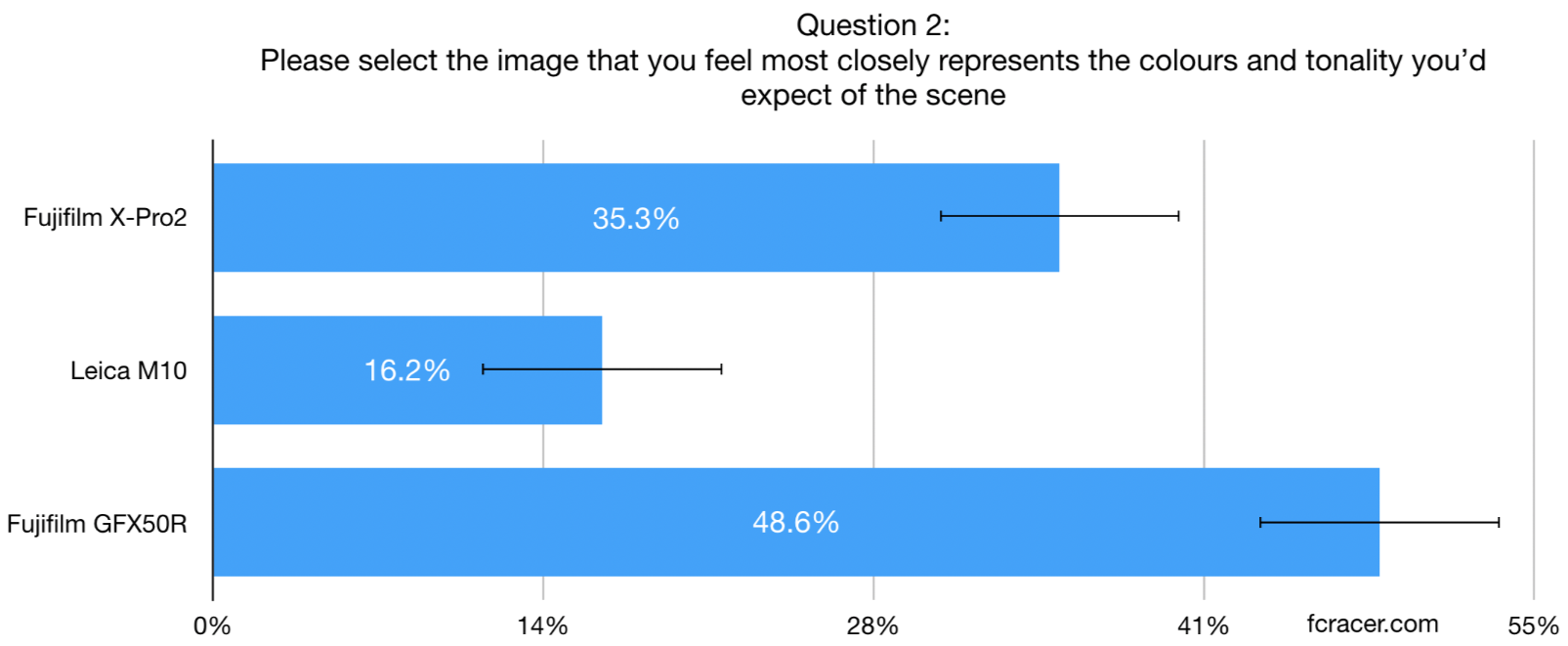

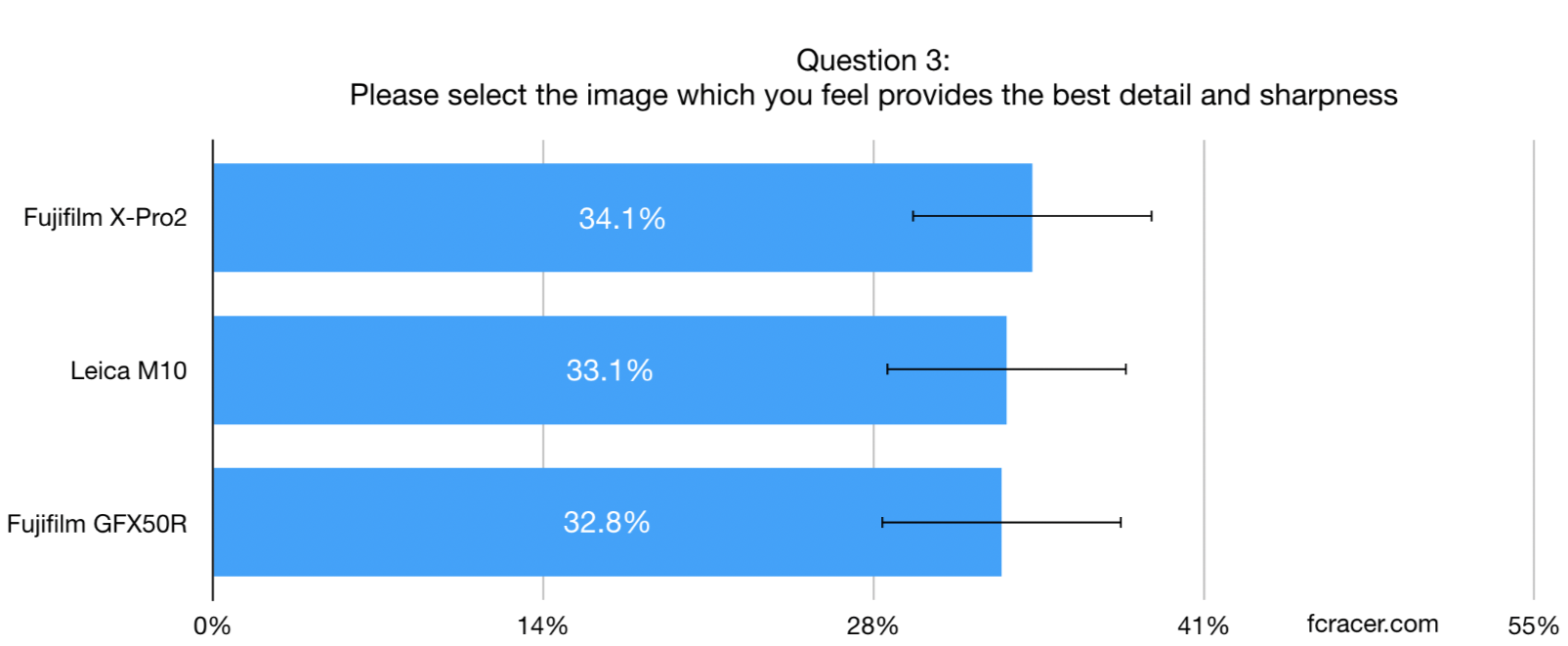

Therefore, I expected Question 1 (overall quality) to show no statistically significant difference, Question 2 (best colour) to have large variance and show a statistically significant difference, and Question 3 (detail/sharpness) to show no statistically significant difference.

Survey technical details

Before we dive into the results, let’s go through the technical details. The survey had 505 responses for the first question, 488 for the second, and 466 for the third. With 505 responses, the margin of error at the 95% confidence level is +/- 4%. For 488 and 466 responses, the margin of error is +/- 5%. The bottom line is that, for a difference between two images to be statistically significant, we’re looking for a gap of 8 percentage points (pp) or more for the first question and 10pp or more for the second and third questions.
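The figures above are in line with the usual worst-case margin-of-error formula for a proportion, z * sqrt(p * (1 - p) / n), with p = 0.5 and z = 1.96 for 95% confidence. The survey tool's actual calculation isn't stated, so treat the sketch below as an assumption (simple random sample, worst-case proportion); `margin_of_error` is a name made up for illustration.

```python
import math

def margin_of_error(n, z=1.96, p=0.5):
    """Worst-case margin of error for a proportion.

    Assumes a simple random sample; z=1.96 corresponds to 95% confidence
    and p=0.5 is the proportion that maximises the margin.
    """
    return z * math.sqrt(p * (1 - p) / n)

# Response counts per question, as reported in the post.
responses = {"Question 1": 505, "Question 2": 488, "Question 3": 466}

for question, n in responses.items():
    print(f"{question}: n={n}, margin of error = +/- {margin_of_error(n):.1%}")
```

Under these assumptions all three questions land between roughly 4.3% and 4.6%, so the 4% and 5% quoted above look like rounded values rather than a real gap between the questions.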

The full results

In the survey, the images were randomly placed, so they bear no relation to the image numbers noted here. Behind the scenes, 1.jpg was from the X-Pro2, 2.jpg was from the M10, and 3.jpg was from the GFX.

I am rather surprised at the results of the survey as they’re counter to my own beliefs and experiences.

Question 1 – Overall quality

Question 1 was intended to test for the “Best overall quality” of the image. If there’s such a thing as a “Medium Format Look”, it should appear here. Much to my surprise, it did: the GFX image led by more than the 4% margin of error. However, it’s not as clear cut as one would like, which is perhaps why there is such vigorous debate on this point. If sensor size has a look, we’d expect the M10 to also lead the X-Pro2 by more than the margin of error, but it did not; the two were well within the margin of error of each other.

Question 2 – Colour

Question 2 was the one that people were most vocal about because colour is so subjective. That was actually the intent of this question: I included a highly subjective question as a control. Because colour is so subjective and so difficult to match exactly, we would expect to see a large variance between the three images, well beyond the margin of error. That’s exactly what the result was, which gives me some comfort that the survey responses were not doctored or manipulated somehow. One thing was clear: people did not like the colours that were post-processed into the M10 image!

Question 3 – Detail and Sharpness

Question 3 was intended to test whether detail and sharpness can be differentiated when the images are resampled to a consistent size. Many people rightly pointed out that the GFX loses its main advantage by being reduced to 18MP; however, the intent was to see if people can tell a difference when viewing on devices that typically have a lower resolution than the native images. The result here was what I had expected: there was no significant difference between the images, with all three falling within the margin of error.

Conclusion

One survey with a set of three images is never going to provide conclusive evidence that we can write a book on, but it does provide for some interesting further avenues to explore. As is often the case with research, trying to answer one question creates several more. Some questions or theories I’d love to try and answer in future surveys:

- Why did the GFX image get selected as higher quality when the M10 image did not have the same differential to the X-Pro2? Is it due to the post processing, especially colour since the GFX also came out ahead in Question 2? Is it the way the lenses render? Is there really a “Medium Format Look” that Full Frame just doesn’t have?

- As discussion started to pour in after the launch of the survey, it became apparent that the viewing device played a big role in how people felt about taking the survey. In the future, I’d like to ask the question up front so that the results can be segregated by viewing device.

- When I post images from the GFX on Instagram, they don’t look as good as those from the X-Pro2 or M10; I believe this is due to the algorithm losing edge detail when downsampling from the 50MP image. If I resize to 2048px beforehand and sharpen for screen output, it has a marked impact on the perceived detail. I would like to ask Question 3 again with some sharpening applied at the 18MP output resolution of the survey to see if the GFX would move past the other two images, which are much closer to their native output resolution.

- Due to where the survey was posted, the survey respondents are assumed to be photography enthusiasts or professionals. Would the results be any different if the survey were sent to a completely random cross-section of the public? I looked into the cost of buying such a sample of responses, with a +/- 5% margin of error, but the cost is rather high at $365. I’d rather put that money towards the GF100-200, but I’m tempted to spend the money and round out the survey with that information.
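For a sense of scale on that last point, the standard worst-case formula n = z² * p * (1 - p) / e² gives a rough idea of how many random responses a +/- 5% margin of error calls for. This is only a sketch under assumed conditions (simple random sample, 95% confidence, p = 0.5); `required_sample_size` is a hypothetical helper, and how many responses the quoted price actually covers isn't known.

```python
import math

def required_sample_size(margin, z=1.96, p=0.5):
    """Smallest sample size whose worst-case margin of error is <= margin.

    Assumes a simple random sample from a large population;
    z=1.96 corresponds to 95% confidence.
    """
    return math.ceil(z**2 * p * (1 - p) / margin**2)

# Responses needed for a +/- 5% margin of error under these assumptions.
print(required_sample_size(0.05))
```

A tighter target such as +/- 4% needs noticeably more responses, because the required sample grows with the inverse square of the margin.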

Even though it’s only one survey, I will definitely be more open-minded the next time someone talks about being able to see the “Medium Format Look”. Correlation does not equal causation, as they say, but if the results of a survey make us more open to potentially seeing something new, it can’t be all bad, right?

I always appreciate hearing from you and I’d love to hear your thoughts on the survey results. Are the results what you expected? Do you see different outcomes or conclusions from the data than what I’ve noted above? What do you think drove the difference in Question 1? If you were to do the survey again, what would you do differently? For a future survey, what would you like to see compared?

Thank you again for taking the time to participate in the survey; I hope that you’ve found it of some use. Below, I’ve shared the RAW files, the Capture One EIP files, and the exact JPEGs used in the survey.

Image 1 from the Fujifilm X-Pro2 & XF23MM F2 lens

RAW file (24.6MB), Capture One EIP (25.1MB), 18MP JPEG file used in the survey (1.5MB)

Image 2 from the Leica M10 & 35MM Summilux FLE lens

RAW file (29.2MB), Capture One EIP (29.8MB), 18MP JPEG file used in the survey (1.6MB)

Image 3 from the Fujifilm GFX50R & GF45MM F2.8 lens

RAW file (49.8MB), Capture One EIP (50.2MB), 18MP JPEG file used in the survey (1.5MB)

I think people are too focused on gear. I prefer to focus on composition and storytelling; that’s where the long-term value is: capturing moments and memories and presenting them in a pleasing way. You can do that with a cell phone all the way up to a Capture One camera. I think technical specs only make a difference if you are a commercial photographer with specific needs, and then it will be a business expense write-off anyway. For non-commercial photographers, I think it matters if you are shooting very specific types of photography, like fast-paced sports. But I would dare say that the vast majority of people doing photography today are essentially doing it for likes on Instagram. And most of the appearance of photos on Instagram comes from heavy editing, in Photoshop for example. It’s not the camera sensor that makes the photos look “out of this world”. It’s the endless layers of editing in Photoshop. When you look at raw files, they pretty much look the same to me. Color rendition from the lens, stops of dynamic range, etc. all play a factor in choosing a camera or lens, but guess what? My technically perfect picture with hours of editing gets a few hundred likes. The cell phone selfie of a “lifestyle” girl in tights with a quick VSCO filter added gets 30k likes. Does it really matter in the end? Only you can answer what’s important to you, but I firmly believe hype and mass marketing by camera companies is what influences people’s decisions, not common sense. Cameras are like Supreme clothing. All hype past a certain point.